Scripts are the bread and butter of system administration. Being able to draw on an extensive set of scripts for different tasks is essential for a sysadmin. But how do you actually manage them?

Proper script management involves a number of different elements.

Structure Your Scripts

Most script development starts with a problem that needs solving urgently, and you're rarely given time afterwards to optimize the script. Unfortunately, this can result in scripts that are difficult to reuse when a similar but slightly different problem comes up.

One solution is to separate the configuration of a problem (hosts, paths and other environment-specific values) from the problem-solving logic. That way you can reuse the same script in different environments later on.

You can accomplish this in Python with separate configuration files, which you can parse with the standard-library module configparser (called ConfigParser in Python 2). It's dead simple to use. A sample configuration file looks like this:

```ini
[network]
host: 192.168.1.1
```

You can then read it in your Python scripts:

```python
import configparser

config = configparser.ConfigParser()
config.read("/home/user/config.ini")

# Look up the "host" key in the [network] section
host = config.get("network", "host")

# ... continue further action based on host
```
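To make the reuse argument concrete, here is a minimal sketch that reads the `[network]` section but falls back to defaults when a key, or the whole file, is missing. The default values and the function names `network_settings` and `load_network_settings` are hypothetical; only `configparser` and its `fallback=` keyword come from the standard library.

```python
import configparser

# Hypothetical defaults, used when a key is missing from every config file.
DEFAULT_HOST = "127.0.0.1"
DEFAULT_PORT = 22


def network_settings(config):
    """Extract (host, port) from a parsed ConfigParser, using defaults if absent."""
    # fallback= is returned instead of raising when the section or key is missing
    host = config.get("network", "host", fallback=DEFAULT_HOST)
    port = config.getint("network", "port", fallback=DEFAULT_PORT)
    return host, port


def load_network_settings(*paths):
    """Read one or more INI files; later files override earlier ones.

    ConfigParser.read() silently skips files that don't exist.
    """
    config = configparser.ConfigParser()
    config.read(paths)
    return network_settings(config)
```

Because `ConfigParser.read()` lets later files override earlier ones, you could pass a site-wide file followed by a per-user file, for example `load_network_settings("/etc/myscript.ini", "/home/user/config.ini")` (both paths illustrative), and keep the script logic itself unchanged between environments.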

Manage Your Scripts

Structuring your scripts is only one part of the equation. How do you manage them?

Even if you're the only one maintaining the scripts, it still makes sense to put them under version control in a central repository. This lets you review every change made over a script's lifetime and roll back to an earlier version if something doesn't work out as expected.

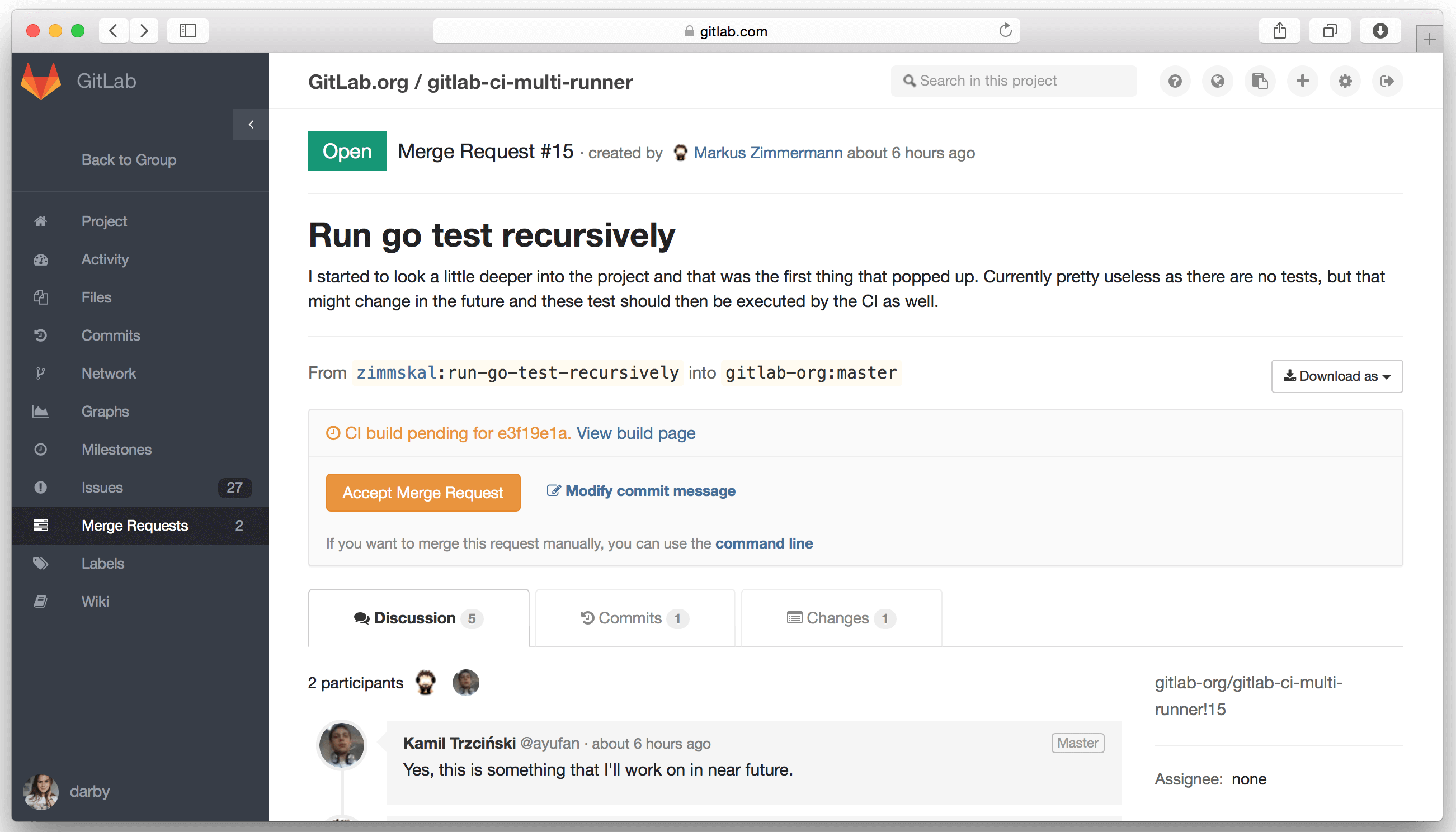

GitHub is one of the best-known public code hosting services. Did you know you can host your own repositories on a similar platform? One option is GitLab.

GitLab provides Git repository management, code reviews, issue tracking, activity feeds and wikis.

The community edition is free to use, while the enterprise edition requires a paid license.

Choose the Optimal Time for Running Your Scripts

In order to optimize the performance of your scripts, it's important to pick the right time to start them. For example, it's probably not a good idea to start a large file transfer script if your backup scripts are scheduled to kick in at around the same time. It's also best to postpone large database operations until after business hours. Otherwise, your colleagues using the actual data from the database might come knocking at your door.
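One way to enforce this inside the script itself is to check the clock before doing any heavy work. The sketch below assumes a hypothetical window of 20:00 to 06:00; the function name `in_window` and the window values are illustrative, and the check handles windows that wrap past midnight.

```python
from datetime import time

# Hypothetical "after business hours" window; adjust to your own schedule.
WINDOW_START = time(20, 0)
WINDOW_END = time(6, 0)


def in_window(now, start=WINDOW_START, end=WINDOW_END):
    """Return True if the time of day `now` falls inside [start, end).

    If start > end, the window wraps past midnight (e.g. 20:00 to 06:00).
    """
    if start <= end:
        return start <= now < end
    return now >= start or now < end
```

At the top of a heavy job you would call `in_window(datetime.now().time())` (importing `datetime` from the `datetime` module) and exit early if it returns `False`. Your scheduler still decides when the script starts; this check is only a safety net, for example against someone running the script by hand at noon.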

To choose the best time to start your script, you need to know when your other scheduled jobs (cron jobs, backup software and so on) run. Avoid starting disk-intensive scripts during backups, and avoid number-crunching scripts while another CPU-heavy job is running.
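For the CPU side, a script can look at the system load average before starting. This minimal sketch assumes a Unix-like system (`os.getloadavg()` isn't available on Windows) and uses a hypothetical threshold of 0.75 runnable processes per CPU. On Linux the load average also counts processes waiting on disk I/O, so treat it as a rough signal rather than a precise CPU measurement.

```python
import os


def too_busy(load1, cpus, threshold=0.75):
    """Return True if the 1-minute load per CPU exceeds `threshold` (hypothetical)."""
    return load1 / max(cpus, 1) > threshold


def system_too_busy(threshold=0.75):
    """Check the current machine; Unix-only because of os.getloadavg()."""
    load1, _load5, _load15 = os.getloadavg()
    return too_busy(load1, os.cpu_count() or 1, threshold)
```

A script could call `system_too_busy()` at startup and either exit or sleep and retry, depending on how urgent the job is.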

Measuring the Performance of Scripts

The load (CPU, network, memory) that you put on a system can be measured in different ways. A flexible network monitoring tool such as WhatsUp Gold provides a graphical overview of resource usage and can raise alarms when usage exceeds the limits you've defined.
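If you don't have a monitoring tool in place, a script can at least record what a single command costs. The sketch below runs a command and reports its wall-clock time and the CPU time its child processes consumed. It assumes a Unix-like system because it relies on the `resource` module, and the function name `measure` is illustrative.

```python
import resource
import subprocess
import time


def measure(cmd):
    """Run `cmd` (a list of arguments) and report its exit code, wall time and CPU time.

    RUSAGE_CHILDREN accumulates over all waited-for children of this process,
    so the CPU time for this one command is the difference before and after.
    """
    before = resource.getrusage(resource.RUSAGE_CHILDREN)
    start = time.perf_counter()
    result = subprocess.run(cmd)
    wall = time.perf_counter() - start
    after = resource.getrusage(resource.RUSAGE_CHILDREN)
    # User plus system CPU seconds spent by the child
    cpu = (after.ru_utime - before.ru_utime) + (after.ru_stime - before.ru_stime)
    return {"returncode": result.returncode, "wall_s": wall, "cpu_s": cpu}
```

A wall time far larger than the CPU time suggests the command spent most of its run waiting on disk or network rather than computing.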

You can also use the verbose output of the commands in your script to measure its performance.

Take rsync as an example. Passing "-v -v" (the verbose option twice) gives detailed output on what rsync is actually doing, which helps when checking for errors. Even a single -v is useful, though: it prints a summary line you can use to check whether the transfer performance is as expected.

```
rsync -v -r source destination

sent 498 bytes received 174 bytes 448.00 bytes/sec
```

The bytes/sec figure is a useful indicator of whether your script is running smoothly: if it drops well below its usual value, something is slowing the transfer down.
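If you want the script to act on that figure rather than just print it, you can parse the summary line. This sketch assumes the default output format shown above. Some rsync versions group digits with commas (for example `1,234`), which the pattern tolerates, but output produced with `-h` (which adds unit suffixes) or under a locale that uses a comma as the decimal separator would need a different pattern. The function names and the minimum rate you pass in are illustrative.

```python
import re

# Matches the summary line printed by `rsync -v`, e.g.
#   sent 498 bytes received 174 bytes 448.00 bytes/sec
# Commas are allowed as digit-group separators.
SUMMARY_RE = re.compile(
    r"sent ([\d,]+) bytes\s+received ([\d,]+) bytes\s+([\d,.]+) bytes/sec"
)


def parse_rsync_summary(output):
    """Return (sent, received, bytes_per_sec) from rsync output, or None if absent."""
    match = SUMMARY_RE.search(output)
    if match is None:
        return None
    sent, received, rate = (g.replace(",", "") for g in match.groups())
    return int(sent), int(received), float(rate)


def transfer_too_slow(output, min_rate):
    """True if the reported rate is below `min_rate` bytes/sec.

    Raises ValueError when no summary line is found, since that usually
    means rsync failed or wasn't run with -v.
    """
    parsed = parse_rsync_summary(output)
    if parsed is None:
        raise ValueError("no rsync summary line found")
    return parsed[2] < min_rate
```

Raising on a missing summary line is a deliberate choice: silently treating it as "fast enough" would hide a failed transfer.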

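Note: If you're not sure whether a problem is caused by slow disks or by the network, you can use iPerf to measure the raw network throughput between the two hosts. If iPerf reports much higher throughput than rsync achieves, you can most likely rule out the network as the cause.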
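Because iPerf reports throughput in bits per second while rsync reports bytes per second, divide by 8 before comparing: 100 Mbit/s, for instance, is 12.5 MB/s. The sketch assumes iperf3 run with its `-J` (JSON) option and reads the receiver-side total from `end.sum_received.bits_per_second`; verify that field path against your iperf3 version. The twofold headroom factor and both function names are hypothetical.

```python
import json


def iperf3_received_bps(json_text):
    """Extract receiver-side throughput in bits/s from an `iperf3 -J` report.

    Assumes the TCP report layout end -> sum_received -> bits_per_second.
    """
    report = json.loads(json_text)
    return float(report["end"]["sum_received"]["bits_per_second"])


def network_probably_not_bottleneck(iperf_bits_per_sec, rsync_bytes_per_sec, headroom=2.0):
    """True if the network can carry at least `headroom` times rsync's rate.

    iPerf measures bits/s and rsync bytes/s, so convert before comparing.
    """
    network_bytes_per_sec = iperf_bits_per_sec / 8
    return network_bytes_per_sec >= headroom * rsync_bytes_per_sec
```

If this returns `True`, the slowdown is more likely on the disk, CPU or encryption side than in the network itself.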

You can also run rsync over SSH. It may be a little slower because of the encryption overhead, but it gives you the additional (and in most cases necessary) benefit of secure file transfers. If you're going to use rsync over SSH, you should definitely set up key-based (public/private key) authentication. That way the script won't have to log in interactively with a username and password each time.
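A minimal sketch of calling rsync over SSH from Python, assuming a key pair already created with `ssh-keygen` and installed on the remote host (for instance with `ssh-copy-id`). The `-e` option tells rsync which remote shell to run, and the ssh option `BatchMode=yes` makes ssh fail instead of prompting for a password, so an unattended script errors out rather than hanging. The host, paths and function names are placeholders.

```python
import shlex
import subprocess


def rsync_over_ssh_cmd(source, destination, key_file):
    """Build an rsync command line that connects via SSH with a specific private key."""
    # -e sets the remote shell; rsync accepts a quoted command string here.
    # BatchMode=yes: never prompt for a password, fail instead.
    ssh = f"ssh -i {shlex.quote(key_file)} -o BatchMode=yes"
    return ["rsync", "-v", "-r", "-e", ssh, source, destination]


def run_rsync_over_ssh(source, destination, key_file):
    """Run the transfer and return rsync's stdout; raise on a non-zero exit code."""
    cmd = rsync_over_ssh_cmd(source, destination, key_file)
    result = subprocess.run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        raise RuntimeError(
            f"rsync exited with {result.returncode}: {result.stderr.strip()}"
        )
    return result.stdout
```

For example, `run_rsync_over_ssh("data/", "backup@example.com:/srv/backup/", "/home/user/.ssh/id_ed25519")` (all placeholders) returns rsync's verbose output, which ends with the same `sent ... bytes/sec` summary line shown earlier, so the transfer rate can be checked as well.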